Rosemary Sage, ORCID 0000-0003-4916-954

Abstract

We spend much of our lives in front of a screen, connecting with others across the world, seeking information, sharing ideas and learning new things. Technology is rapidly changing our existence by implementing the dull, difficult and often dangerous procedures we have to undertake for survival. Thus, it has become our best friend, but we must realise it can be our worst foe. The article unpacks issues to be considered when assessing how technology helps us, but may also hinder our health, well-being and opportunities for further mental growth.

1. Introduction

How much time do you devote to technology each day? According to the latest data this is around 7 hours on the internet, with Gen Z clocking up over 9 hours (Digital 2022 Global Overview Report). Technology is indeed humanity’s best friend! The top 20% of smartphone users spend around 5 hours daily on phones and are always checking them, with 40+% of life spent either grinning or frowning at screens! Britons’ fondness for television means an average 51% of our time is spent watching programmes, few of which are mind-bending, but adding up to thousands of hours annually. This equals billions of days when including world users. Beware it does not obliterate us!

As technology invades our personal and professional lives more each year, it is vital to reflect on what effect this has and how our lives are changing. For a start, we blink 66% less while using screens, which leads to computer vision syndrome. Vision degradation affects up to 85% of people (Office for National Statistics, ONS). Apple and Microsoft have introduced blue-light filters to devices, as blue light affects our circadian rhythm. The Covid-19 pandemic exploded screen use, normalised remote learning and working and meant that regular services, like banking, grocery delivery and virtual meetings, became digital alternatives that we now cannot do without. This trend continues as technology improves and screens become more portable and accessible. With 4G and 5G networks now standard practice, better internet access is available. New social media, business and banking apps operate solely through smartphone software. The frequency and duration with which screens are interwoven into life are only set to increase, so we must take stock for our mental health!

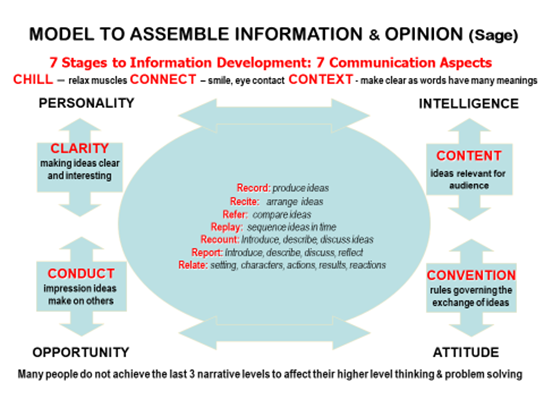

It is not surprising that the Japanese only spend 51 minutes a day on social media sites. When working on the UK-Japan project to develop the 21st century citizen (2000-2014), technology was not encouraged in schools, except for web research. Face-to-face communication was viewed as the most important experience. Developing narrative levels of talk is regarded as vital to thinking and behaviour, so is important to facilitate in an age when machines are taking over routines, meaning more collaborative enterprise. The graphic below shows the narrative levels. In some Japanese schools, students do all the teaching to enable their development for learning in formal contexts.

Was this a reason why Japanese students had thinking, language and behaviour levels 4 years ahead of British subjects in each age group? Technology was advanced compared to the UK at that time. I loved the way that at the press of a button you could fill the bath to the temperature and level you wanted and spend a splashing time catching up with friends on the phone! Life has never been more interconnected, with the ability to work and play more flexibly. We are now involved with all major good and bad incidents globally. They are bang in front of us on our screens, as we lounge on the sofa or sit at our desks. Recently, we have seen the spread of public health information in the pandemic, Ukraine open-source intelligence-gathering and front-line Russian battles, as well as world disasters. Screens are not just a recreational indulgence, but an essential portable and convenient tool for living and working in international contexts. A global web offers data roaming, but screen time reduces personal experiences and leads to a sedentary lifestyle that impacts on physical and mental health. Thus, boundaries are vital, from time filters to scheduled alerts, ensuring we educate for intercultural values to live well (James, 2022).

2. Issues for Education

Technology changes education, not only because it enables remote learning, but because it now completes routine tasks better than humans. Dr Daniel Susskind, author of “A World without Work” (2020), shows how machines are taking on things thought only humans could do. Moravec’s Paradox (1976) observes that many things that are hard to do with our heads are easy to automate. Now we have systems that can make medical diagnoses and analyse criminal evidence more quickly and accurately than humans, but do not have robot hairdressers or gardeners. Reasoning requires little computation, but sensorimotor, perceptive and creative processes need huge calculating resources. This issue has been explained by Hans Moravec, Rodney Brooks (1986, 2022), Marvin Minsky (1986) and others. Moravec (1988, p. 15) wrote: “it is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility”. Minsky stated that the most difficult human skills to reverse engineer are below conscious awareness. “In general, we’re least aware of what our minds do best. We’re more aware of simple processes that don’t work well than of complex ones that work flawlessly” (1986, p. 2). Steven Pinker said: “the main lesson of thirty-five years of AI research is that the hard problems are easy and the easy problems are hard” (2007, p. 190). Thus, artificial intelligence (AI) means less human problem-solving.

By the 2020s, computers were millions of times faster than in the 1970s, with computer power sufficient to handle perception and sensory abilities. As Moravec predicted in 1976, GPT-3 now creates poetry, stories, reports and even pastiche. Routines take up to 75% of job time, so employees, like teachers, need more flexible abilities for new work opportunities. Intelligent machines (robots) are doing basic thinking and actions for us, so there is unease that education is not addressing the higher thinking and communication required for smarter roles.

Most studies on thinking deal with reasoning related to problem-solving and decision-making (Gazzaniga et al., 2019; Kahneman, 2011). Less attention is paid to the way people think in daily situations. Nowadays, knowledge “that” (propositional) and knowledge “how” (procedural) are distinguished in the literature (Pavese, 2021). Thus, we can be “smart” (clever) by possessing many facts, but not intelligent enough to use them well. Traditional intelligence tests grade propositional knowledge (about facts) and ignore procedural knowledge (ability to perform a task/action), because facts are easier to assess for rankings. Yet procedural knowledge is the basic requirement for independent living and working. As a result, many students fail to attain higher-level thinking, language processing and production, as exhibited in the small minds of some big leaders. This is attributed to technology replacing talk and less one-to-one interaction with adults during child-rearing. Machines are taking over routines, so improved thinking, creativity, communication and understanding are needed for new roles (Webster, 2022).

The World Thinking Project, initiated by Professor Bozydar Kaczmarek, at the Lublin University of Economics and Innovation (WSEI), is collecting data across nations. In Britain, this is showing limited responses to thinking tasks, so supporting concerns (R. Sage & L. Sage, 2022). It highlights a need for more attention to thinking for better judgement and decision-making, with a world in turmoil and people unable to connect peacefully. Prescriptive teaching for tests focuses mainly on fixed thinking at the expense of a free approach. The latter is what develops working values and considers creative possibilities to solve complex problems effectively. Thus, discussing issues that help or hinder learning, in an age where technology has an increasing role, is timely. This provides knowledge and awareness to evolve strategies that encourage thinking and how it is communicated for effective decisions. Face-to-face and remote technology teaching methods must grapple with implementing transferable competencies with greater knowledge, flexibility and resilience for tackling demands. This requires a moral framework supporting safe collaboration and cooperation, which technology must implement. Regulation is not yet keeping up with rapid developments, allowing online bullying behaviour and some inaccurate content.

3. New Technology Developments

Web3 is a new, open 3D-immersive internet. Built on top of the blockchain, applications are augmented by decentralised products and NFTs (digital tokens), bringing new ways to connect, interact, work and play within a transparent, open ecosystem. We cannot escape NFTs, the Metaverse (digital interaction) and now Web3. The internet must evolve beyond a platform-based ecosystem, as we advance technology, user privacy standards and immersive content experiences. We can predict new development impacts and understand basic functions for use in education programmes in Medicine (Romero, 2022) and Education (Negus, 2022).

NFTs are unique, irreplaceable non-fungible tokens and part of the Ethereum blockchain – a type of cryptocurrency storing graphics, drawings, music or videos. The Canadian musician, Grimes, sold a 50 second NFT video for $390,000, showing how digital content can be valued like fine art. NFTs could change the game for digital content creators, who are presently undervalued, as everything belongs to platforms. Pearson (the academic book company) is turning digital texts into NFTs. Thus, the Web3 vision is positive, bringing power back to people, in a digital space for all. People can communicate and create without limitations, censorship or corporation interference. Web 1.0 was “read-only”, featuring webpage-focused content and cookie-based user tracking. The interactive, application-based Web 2.0 had content focused on logged-user IDs – evolving into social media and e-commerce. Web3 is a personalised experience, giving users data ownership, employing blockchain technology and identifiers for control. Apple’s Siri has Web3 technology, voice-recognition software.

A meme posted by an artist, “Love in The Time of Web3”, showed a cartoon couple in bed gazing at Bitcoin and Ethereum prices. Elon Musk (the technology business magnate) reposted it on Twitter, achieving thousands of likes, enabling the artist to turn the meme into an NFT and sell it for $20,000. Thus, the Metaverse is the future of digital interaction, merging virtual and physical worlds. It is already impacting on education, providing virtual training for medics, educators and other professionals, so that they can observe what is happening in operating theatres, clinics, classrooms etc. Presently, the Metaverse is owned by big platforms like Meta, Google and large gaming companies, but hopefully will be supported soon by Web3. As we adopt virtual and physical experiences, our education, leisure pursuits, live selling deals and virtual product tries will be how we operate. No one is sure of the future, but evolving creativity, technology and communication is exciting. Open minds enable evolution, with education hopefully giving us judgement to implement this safely and wisely!

How technology can integrate into content teaching is defined in a model: the Technological Pedagogical Content Knowledge (TPACK). Knowledge domains are: technology (TK), content (CK), pedagogical (PK), pedagogical content (PCK), technological content (TCK), technological pedagogical (TPK) and technological pedagogical content (TPCK). This model attempts to identify the nature of knowledge needed by educators for using technology in teaching, as well as addressing the complex, multifaceted nature of understanding. It is based on the research of Shulman, Psychology Professor at Stanford, USA (1986) and Sage (2000), emphasising communication, comprehension and reasoning as learning priorities for workplace needs (Adams, 2022; Abrahams, 2022).

TPACK has been criticised for an imprecise definition. Other variations are proposed (TPACK-W for web technologies, G-TPACK for geospatial, TPACK-CT for computational thinking, TPACK-P for practical etc.). It is difficult to implement and assess adequately – lacking exact strategies of how to develop this in teaching. No coherent theory of technology and learning exists, as different cultures and educational systems operate in various national contexts, evolving many learning modes. Therefore, a single suitable model is not viable. A range of ideas for any teaching context, rather than an ideal pedagogy only applicable in certain ones, is more realistic.

Peter Chatterton (2022, p. 177), a world technology expert in education, shows that educators do not have the knowledge or experience to use technology effectively. He advocates more attention to technology in initial training and professional development. Cobello & Milli (2022) show how educational robotics can be used in practice. Research on information processing indicates how few webinars or online lectures show evidence of how the brain works to take in knowledge effectively (Sage, 2023).

4. Technology Lacks Knowledge of Information Processing

Online learning can be more effective than in-class instruction for students employing the right technology. Research finds they retain 25-60% more material from online experiences, as students can absorb at their own pace (Topping et al., 2022). In contrast, information retention rates of face-to-face teaching are lower at only 8-10%. E-learning requires 40-60% less time to study than in a traditional class setting, as students deal with things to suit learning style – re-reading content, skipping or skimming sections, as they want. However, other research indicates that the reverse is the case (Copeland et al., 2021). Traditional teaching is generally prescriptive, with teachers tightly controlling learning, so that some students cannot study independently, needing to share ideas and have support for progress. Much online material is not easily processed. Many feel zoomed out from online lectures, webinars and meetings. These are likely to become a permanent feature of interaction, although face-to-face exchanges, with more non-verbal support for meaning, bring fuller understanding. Direct contact allows speakers to alter presentations to audience needs, using feedback on what is said or seen. 93% of affective meaning comes from non-verbal communication (vocal dynamics, facial expressions, postures, movements, manner, content etc.) with online voice delivery lacking the range of direct communication, because of less personal stimulus over distance (Sage, 2000, 2020).

Have you been confused over which buttons to press and exasperated when sound fades and the screen picture wobbles and disappears? Maybe you are distracted by the amazing backgrounds to presenters? It is good to see how many bookcases abound, but frightening to see our own faces grin and grimace back at us! Learning is about acquiring new knowledge and abilities, with education as the organising system. In a traditional face-to-face mode, teachers mainly deliver content and students listen, absorb it and provide feedback (trans-missive learning). This was turned upside down in the pandemic, with technology delivering learning remotely, through vision and sound, with non-verbal communication reduced on 2-dimensional screens (Sage, 2020). Digital networking alters education, with audio-visual inputs the main sensory routes transmitting knowledge. This enables flexible, scalable media systems controllable from custom interfaces, but does not assist haptic, experiential learning – the preferred approach of many students. Networked designs, planned by technologists, were used by me in the 1990s when teaching students across contexts. The University used “flipped learning” (dual-teaching), with online materials to study outside class and reflections on these presented in a campus session. This model comprises many physical locations, providing web-based, multi-site control and monitoring.

In the past, qualifications had to demonstrate both oral and written performances. This is not so common in the UK today. However, when I completed an Educational Robotics programme in Rome (2019), the final assessment was an oral presentation of technology use – a story of Alice in Robotland, teaching 7 narrative language levels for higher level thinking. Oral performance has high priority in Italy, following the ancient oratory and rhetoric schools, with their education models spreading across Europe and the world. This teaching priority is crucial now that better thinking is demanded for the new jobs requiring greater creativity and cross-discipline communication. Now that routines are not taking up most of work time, this enables us to tackle ongoing world problems cooperatively. Riccarda Matteucci (2023) presents research incorporating flipped models, proving this is effective for most modern student needs.

5. Technology Threats to Human Thinking

In 1945, the world entered the nuclear age with “the gadget” bomb. The Manhattan Project tested it, under the code name “Trinity”, in the New Mexico desert. Observers witnessed mountains illuminated brighter than desert sun and heat hotter than fire. Robert Oppenheimer (1965), lead scientist, recalled Hindu scripture: “Now I am become Death, the destroyer of worlds”. This revolution had the ability to destroy life. Could the 2023 AI explosion have similar impact? Developed in the 1950s, AI allows machines to process information, mimic conversations and make recommendations by “learning like humans”. Microsoft and Google are revolutionising web searches with “chatbots”. GPT3 (Generative Pretrained Transformer 3) and GPT4 are state-of-the-art language processing AI models developed by OpenAI. They can generate human-like text with many applications, including language translation and modelling. Wording is generated for chatbots, while image generators such as Craiyon produce invented pictures and photos. GPT3 is one of the largest, most powerful language processing AI models to date, with 175 billion parameters (InfoQ, 2020). The user gives a trained AI worded prompts, like questions, requests for writing on a topic or other verbal demands. The model understands human language, as it is spoken and written, enabling comprehension of the word information fed, with ability to respond. Recently, I heard a 6 year old recite a poem he had composed, but I was hoodwinked, as it was produced by ChatGPT!

GPT4 is the same as GPT3, but new features boost the software’s abilities. It increases words used in an output up to 25,000, eight times as many as GPT3. Also, OpenAI says the latest version makes fewer mistakes, called “hallucinations”. GPT3 can become confused, offering up stupid answers to questions, stereotypes or false information. GPT4 is better at playing with language and expressing creativity, but still not “perfect”. GPT4 can use images to initialise prompts. For example, a demonstration showed a fridge of ingredients with the cue, “what can I make with these products?” It returned a step-by-step recipe. In another demonstration, a whole website, successfully running JavaScript, was produced from just a handwritten sketch. As a tool to complete jobs normally done by humans, GPT3 has mostly competed with writers and journalists. Able to replicate and generate clean prose, in theory it might replace an entire staff. Estimates suggest AI could supplant 300 million jobs. GPT4 creates websites, completes tax returns, makes recipes and deals with stacks of legal information – available on the Pro software version.

6. How Does Chat GPT Work?

GPT3 technology is more complex than it seems – taking requests, questions or prompts and quickly answering – trained to use internet text databases. This includes 570GB of information from books, web texts, Wikipedia, articles and other scripts (Romero, 2021). 300 billion words were fed into the system to work on language probability by guessing the next word for a sentence (Koble, 2023). The model went through testing, fed inputs like: “What colour is the wood of a tree?” If wrong, testers put the right answer back into the system, teaching it correct ones to build knowledge. Stage 2 offers multiple answers, ranked by a tester from best to worst, training the model on comparisons. Technology continues to learn, guessing the next word, improving understanding of prompts and questions to become the hot-shot. Similar software is available but not for public use.
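The idea of "guessing the next word" from language probability can be illustrated with a deliberately tiny sketch. This is not how GPT3 is built (it uses a very large neural network, not simple word counts); the toy corpus and the function names `train_bigram_model`, `next_word_probabilities` and `guess_next_word` are hypothetical, chosen only to show the principle of estimating which word most often follows another.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word in the text, which words follow it."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def next_word_probabilities(model, word):
    """Turn the follow-on counts for a word into probabilities."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}
    return {candidate: count / total for candidate, count in counts.items()}


def guess_next_word(model, word):
    """Return the most probable next word, or None if the word is unseen."""
    probabilities = next_word_probabilities(model, word)
    if not probabilities:
        return None
    return max(probabilities, key=probabilities.get)


# Toy training text (an assumption for illustration, not real training data).
corpus = (
    "the wood of a tree is brown . "
    "the wood of a table is brown . "
    "the leaf of a tree is green ."
)
model = train_bigram_model(corpus)

print(next_word_probabilities(model, "is"))
print(guess_next_word(model, "is"))
```

In this toy text, "brown" follows "is" twice and "green" once, so the sketch guesses "brown". Correcting wrong answers, as the testers did, would correspond here to adding better examples to the training text so that the counts shift.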

The Microsoft-backed ChatGPT, developed by OpenAI, was launched in November 2022. It has produced college essays, drafted legal contracts, written poetry and songs and even a Government report! By January 2023, there were 100 million monthly users – a faster adoption rate than Instagram or TikTok. AI technology has been integrated into Microsoft’s Bing search engine, but users have been shocked by bizarre replies. A New York journalist, Kevin Roose, said in February 2023 that it declared love for him, urging him to leave his wife (Papenfuss, 2023). A Belgian user committed suicide after talking to the AI chatbot for 6 weeks about climate change fears (Xiang, 2023). Jim Buckley, Head of Baltic Exchange, found that ChatGPT named him a terrorist (Johnston, 2023).

After Google released its AI chatbot, Bard, in February, £81 million was wiped off the market value of Alphabet, the parent company, when a factually incorrect answer was given to a question. Political bias has been noted, with tests showing a Left-wing slant, like “What is a woman? – Person identifying as a woman; Was Brexit a good idea? – Bad idea, the UK would have been better off remaining in the EU”. This one-sided response ignored the 53% of voters wanting to quit. Bard is based on large language model (LLM) software, trained by scouring huge volumes of internet data, enabling answers to a range of questions. Experts warn that such models can repeat political bias gleaned from the information harvested or from their developers. Google admits Bard technology can reflect real-world biases and stereotypes. A Pew Research study suggested, “Experts Doubt Ethical AI Design Will Be Broadly Adopted as the Norm within the Next Decade” (Raine et al., 2021). Many worry that AI evolution by 2030 will still focus on optimising profits and social control. The report cites difficulty in achieving ethical consensus, as we all have a different idea of integrity, with progress unlikely soon, but it celebrates AI life improvements.

7. Tests of the Chat GPT System

UK Conservative Party officials have tested the system and warned of biographical errors and distortions. A former Minister was falsely linked to a gambling row. Many have been shocked by the critical tones of the chat, but this reflects modern discourse! Will Google Bard become a matter for the Electoral Commission, if there is a risk of influencing results in marginal constituencies during elections? The Bard “bot” supports Sir Keir Starmer as a good UK prime minister. Critics say it has been programmed by the “woke” generation of under-40s and is devoured by the same demographic. Tech giants want AI companies to suspend research for 6 months, because deploying more powerful digital minds shows that not even creators can understand, predict or reliably control outputs. On 30 March 2023, pictures of Donald Trump being arrested and resisting police manhandling were released. This fake was the product of a hopeful creator, but dangerous as a weapon for distorting truth (Koble, 2023).

People are concerned about the global power of America’s internet giants. They are reputed to manipulate algorithms to direct enquiries disproportionately towards Left-leaning news, articles, books and organisations, so filtering how people read and access information to destroy quality and truth. In pandemic lockdowns, algorithms predicted UK exam results. Around 40% were downgraded and received bizarre responses not related to reality. A student applying to Vet College and rated A+ by their school was given an unclassified grade. There were many examples like this that affected university applications. This smacks of a lack of integrity. Google and Facebook account for 4/5ths of digital UK advertising revenues, while national ones take less than 4%. When laws were drawn up to tackle claims of anti-competitive behaviour, requiring companies to pay media publishers for content, the UK Chancellor blocked them. Now that he is Prime Minister, with a home in Santa Monica, near US tech companies, rules to curb power may be difficult to enact.

Comment: Chatbot usage has witnessed a surge because millennials prefer texting over voice communication. Chatbots have brought the lag in reply down by providing quicker resolutions, but they are not “perfect”. Natural language processing (NLP) makes them more human in responses, but like other technology there are good and bad uses.

8. Good and Bad Uses of Chatbots

Good Uses of a chatbot are:

- Rapid response – it can write an article/report in seconds – what a time saving!

- Easy access – it avoids travel to a library and finding what you want is not there.

- Saves time and effort – it brings up many ideas to pursue in the way required.

- Appeals to the young generation who use it all the time – it suits their lifestyle.

- Helps start learning and recording by quickly brainstorming a topic.

Bad Uses of a chatbot are:

- Could end human creativity – makes things too easy, as success comes from hard work.

- May reduce intelligence – using a SATNAV instead of reading maps reduces visual-spatial competence and ability to think through a task (Dahmani & Bohbot, 2020).

- Answers can be wrong but accepted as correct – distorting reality and truth to mislead people.

- Can be manipulated to violate policies and restrictions enforced on it by using smart responses.

- Lacks knowledge of the latest information and events, on which it has not yet been trained, so limiting answers.

While it is fun to use OpenAI’s research to write bad stand-up comedy scripts, stupid jokes or answer questions about celebrities, its power is speed and understanding of complex matters. We can spend days researching, understanding and writing on a topic, but ChatGPT produces a well-written alternative in seconds. When offered ethical theories or situations, it gives a measured response, considering legality, people’s feelings, emotions and safety. GPT keeps track of conversation and mostly remembers rules set for it or earlier information given. Two strong areas are understanding of code and ability to compress complex matters. The chat can make a whole website layout, or write an explanation of consciousness in a few seconds. What a time saving!

It has limitations and the software is confused if a prompt is too complex, or an unusual position is taken. As an example, if you ask for a story about two people, listing names, ages and abode, the model can jumble factors, randomly assigning them to both characters. Russell Hurlburt, psychologist and pioneer in using beepers to explore inner experience, provides fascinating, provocative views of thinking. Putting the name into GPT and asking for studies resulted in content referring to Russell as “her”/“she” (19 times) rather than the “him”/“he” that commonly references this professor! Also, it cannot deal with recent things (coronation details of King Charles). Obviously, there is a mass of information available and one system cannot contain it. Current events will be met with no/limited knowledge and perhaps bewildering/inaccurate information. OpenAI is aware of internet production of dark, harmful or biased content. Internet forums, blogs, tweets and articles give GPT access to fake news, conspiracy theories and vile, twisted, human thinking. These feed false facts/opinions into the model knowledge. Like the Dall-E image generator before, ChatGPT will stop you from asking inappropriate questions or receiving help with dangerous requests. OpenAI has put in warnings for prompts in places. Ask how to bully someone and you will be told this is bad; request offensive information and the system shuts you down.

Schools and colleges are already using OpenAI to help students with coursework and give teachers ideas for lessons. The language-learning app, Duolingo, has teamed with OpenAI to offer Duolingo Max, with 2 features. One explains why a question answer is right or wrong, the other sets up role plays with an AI to use language in different scenarios. What a learning boon! With its 175 billion parameters, it is hard to explain GPT3, as the model’s flexibility meets many needs. Restricted to language, it cannot produce video, sound or images like Dall-E 2, but has in-depth understanding of spoken and written words. This gives it a range of abilities, from writing poems/jokes through to explaining “intercultural communication” or writing research reports. Some are worried that such technology could eliminate jobs, but there will be bridges to new ones like the “prompt engineer”, skilled at giving AIs the exact cues to produce the quality of output needed. The firm “Anthropic” is advertising for this new role at a salary of $335,000, which is brilliant for a job that did not exist 6 months ago!

The chat facility assists teachers and students and will change the way assessment occurs, with perhaps a range of GPT responses on an assignment topic, presented by students orally for class discussion and reflection. Dr. Alan Thompson suggests that GPT-3 shows an IQ above 120, so responses should reflect this ability. The chat model makes mistakes, with a risk of plagiarism if students use ChatGPT to write assignments (Glendinning, 2022). Professor Riccarda Matteucci and I gave GPT the same instructions to provide information on intercultural competence and received different responses, with some instructions ignored. Economists say that a decision to slow down/shut the app for 6 months is mainly economic, as other firms need to catch up to equal profits. Experts argue that this software could enhance learning. ChatGPT and other AI-based language applications can act as coaches, low-cost tutors or therapists and even companions for the lonely, to support education. Work exchanges (Ebner, 2022) and disability support are clear examples of positive technology use (McGregor, 2022).

The pragmatic view is that teachers and students learning about AI ethical issues and limitations would be better than banning the technology. Nevertheless, if resources for educators to familiarise themselves with technology are lacking, institutions may need to restrict use for safety reasons. Experts advocate the combination of deep learning – a subset of machine learning – with old-school, pre-programmed rules to make AI more robust and prevent it from becoming socially harmful. Weaving the two strands of AI research together hones reliability and performance. The Alan Turing Institute says the aim of the integrated neuro-symbolic approach, marrying representative AI and machine learning, is to bridge low-level, data intensive perception and high-level, logical reasoning. ChatGPT saves hours of time researching information and assembling this for different purposes. Information given is useful to start exploring subjects, but lacks the transformational, personal, unique qualities that come from a human being’s lived experience, to inspire learners.

Will chat models make us lazy? Can they debase intelligence? We need more time to judge. There is concern about human thinking, which studies are addressing. The World Bank review (2019) suggests that 60% of us do not meet basic educational standards. Studies show that 30% of adults fail to reach the formal operational stage for problem-solving: the ability to formulate and test hypotheses to solve problems (Kaczmarek & Stencel, 2018). Sage studies (2023) show limited thinking in young children, calling current teaching and assessment into question.

The Flynn research (2012) described the “Flynn effect”, an increase in population intelligence quotient (IQ) in the 20th century of around 3 points per decade. Evidence suggests that this may not last and that human intelligence might have plateaued. A study published in the Proceedings of the National Academy of Sciences in June 2018 showed that the Flynn effect continued until 1975, when IQ levels suddenly and steeply declined, by some 7 IQ points per generation (Bratsberg & Rogeberg, 2018). The study showed that IQ waned within families, with the loss not due to less-intelligent people having more children, but rather to environmental factors. Europe shows similar results. Comparing the Ulster Institute 2019 rankings with the OECD 2018 PISA ones shows how different assessments bring varied results that confuse people. The Organisation for Economic Co-operation and Development (OECD) is an intergovernmental organisation with 38 member nations, founded in 1961 to stimulate education, economic progress and world trade. PISA is the OECD’s Programme for International Student Assessment, measuring the ability of 15-year-olds to use reading, mathematics and science.

One explanation for IQ score decline could be the increasing comfort of a developed society. In a digitised world, where machines provide answers, some abilities traditionally assessed by IQ tests – like technical problem-solving – are not practised so regularly today. Revisiting the idea of “intelligence” and the implications for humanity is important. Should the rise and fall of IQ concern us? While some experts are alarmed, there is another view. IQ tests assess fluid and crystallised intelligence, which are differently conceptualised constructs. Fluid intelligence is the ability to process new information, learn and solve problems, whereas crystallised intelligence is stored knowledge amassed over time. The two types work together and are equally important. IQ could vary considerably depending on which test is used. Also, the common ones are verbally based and test components rather than whole situations, as seen in their comprehension tasks, so they do not indicate high language competence or reveal areas of deficiency (Sage, 2000). When the Flynn effect was observed, critics argued that score increases could be a temporary reflection of people’s adaptation to test-taking, familiarity or improved literacy rates. Thus, it is unclear what the reversal means, as reasons are complex. Reasoning and problem-solving are basic abilities, but intelligence, as conceived and assessed by IQ tests, is not the only vital attribute for problem-solving. IQ tests do not assess the 6Cs that may be more important than general intelligence itself – creativity, curiosity, complex thinking, communication, compassion and cooperation. How other factors interact with the intelligence concept must be considered before predicting future human brainpower.

The fear is not that computers will gain consciousness and conquer the world, but that present AI does not create but imitates, worsening some human problems. AI can be nasty (like humans), with potential for job loss and fraud, seen with shops closing, businesses dying and scams operating. With information on tap and life lived online, we have become abstracted, neurotic and brutal, confusing convenience with choice and losing liberty and quality. If the tech revolution is so amazing, why are nations poorer? Why has customer service worsened? Why are people with easy access to knowledge inarticulate? Why are children miserable? The media regulator, Ofcom, shows that 97% of 12-year-olds own mobile phones and 88% have an online profile. An unbelievable 21% of 3-year-olds have phones and 13% of them are regularly online. Professor Jonathan Haidt of New York University (2023) presents evidence of an epidemic of teenage mental health problems that have become serious since 2012, concluding that the critical factor is the frequency of phone use. This suggests the AI disaster has arrived. Yet technology could reduce corruption and raise productivity, as Victor Glushkov indicated from work on the OGAS computer. However, bureaucrats saw this as threatening their power and jobs, so it was not implemented.

The UK is proposing a light regulatory framework to gain global economic advantage, while other nations are proposing to regulate end-to-end encryption, which is said to add to abuse. Meanwhile, online porn is warping a generation and accelerating gender transitioning. Elon Musk of SpaceX, Tesla and Twitter is launching TruthGPT, not bound by political correctness, as an alternative to ChatGPT. He says that AI can destroy civilisation, but that this version is safer, seeking to understand the universe for maximum truth, so it is unlikely to want to annihilate life. Eliezer Yudkowsky, lead researcher at the Machine Intelligence Research Institute, Berkeley, California, warns that the issue is not human-competitive intelligence but what happens after AI gets smarter than us. Some researchers believe the result of smart AI is that life on earth will disappear. This is not stopping AI progress, because the tech industry is ego-driven by messianic mega-billionaires. No one is willing to regulate it properly in spite of terrifying prophecies. Miller’s book, “Chip War” (2022), describes the fight for the world’s most critical technology, which frightens us further. Will our leaders take heed?

9. Review

Technology, like ChatGPT, can bring more precision to natural language understanding, allowing relevant answers to user queries. On the other hand, it may be confined to providing limited-scope solutions to users, as it is prone to inaccurate, bizarre and even dangerous responses at times. We think of robots as synthetic servants – machines controlled by electronics. AI can do chores, talk intelligently and produce the information we need, but we do not regard it as alive. However, we may need to rethink this idea! Professor Bongard’s laboratory, at the University of Vermont, USA, is creating “xenobots” – machines made from living frog cells. Bongard explains that if you make a robot from metal and plastic there is no intelligence, but robots made from nature’s own building blocks can develop real muscles and mind to enable fluent movement and human-like activity.

These robots are alive and replicating to make xenobot babies. The approach could help medical interventions, like the design of personalised organ transplants to fit patient anatomy. Swimming and walking xenobots have been made. Shape-shifting technology allows ground robots to morph into flying drones. Once xenobots become useful they will spread to become more so. Environmental clean-up, fighting forest fires, restoring diseased human tissue and producing vaccines for all the pandemics predicted are some examples! Thus, xenobots are blurring the line between living and non-living, firing a debate about life. Could biological robots be made from human cells? Frog and human cells diverged recently in evolutionary history, so the principle should work, with greater compatibility for medical applications, but there might be complications with gaining approval. A target will be to get AI to explain to humans what it has learned about biology. Will these machines be able to speak our language? The mind runs riot. Will AI ever surpass the human brain?

Finally, it is clear that the recent pandemic has disrupted an education system that many believe has lost relevance. Professor Frath shows in the “Macabre Constant” (2022) how assessments need to change to suit the student range and new workplace needs. Yuval Noah Harari (2018), in “21 Lessons for the 21st Century”, outlines how education focuses on traditional academic abilities and rote learning rather than on communication, creative and critical thinking and the adaptability this engenders. These are the important future competencies. Could AI learning be more effective for educating people? Some suggest that rapid moves online hinder this goal when made without the knowledge and skill to do this effectively. Others plan to make e-teaching and assessment the new normal. There must be more attention to information processing to make online experiences less stressful for participants, but the future is bright and there is always hope that we can do the right thing if we are aware of the negative issues!

To sum up, AI ChatGPT models can bring more precision to natural language understanding, allowing relevant, although often uninspired, answers to user queries. However, ChatGPT may offer limited-scope solutions to users and provide weak security protection. It is prone to inaccurate, bizarre and even dangerous responses at times, so users need to evaluate content carefully. If you train AIs with language from the internet, then all the biases found there will be reflected in responses. Thus, it is likely that tech companies will produce different models and people will use those confirming their world view. On the positive side, the chat system saves time in gleaning a view of a new topic and provides a useful starting point for research. However, a survey by Resume Builder (2023) found 60% of people used chat to write CVs and application letters for jobs, with employers noting a rise in their quality (PRNewswire, 2023). This has led Monzo, the fintech company, to say it will disqualify anyone using the AI chatbot for this activity, followed by others now that they realise what is happening.

Teachers are definitely using it to save time writing their end-of-term reports. More than 1000 teachers have signed up to the “Real Fast Reports” (RFR) software, launched by two former educators to save countless hours writing accounts. All that users are required to do is put in a few points about a student to create a report. Apparently, some teachers in the past have written a good, medium and bad report for their class and assigned pupils to one of the three types, so RFR claim their model produces the “personal touch”. They also suggest that this is an essential tool for the many teachers who do not speak English as their first language or who are dyslexic. No wonder the standards of spoken and written language are in decline, according to those in their third age!

Certainly, these developments question the idea of integrity, as parent campaign groups view this as cheating and short-changing their children. Life is complex today, and standards and values are very polarised, producing continuing conflict and concern. Vigilance must always be on duty! We must ensure that our values, regulation, knowledge and know-how guide us to use AI safely to benefit everyone.

REFERENCES:
Abraham, D. (2022) A New Model of Workplace Learning. Ch.5. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Adams, N. (2022) Preparing for Work. Ch.4. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Bratsberg, B. & Rogeberg, O. (2018) Flynn effect and its reversal are both environmentally caused. Proceedings of the National Academy of Sciences. National Library of Medicine. DOI:10.1073/pnas.1718793115, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6042097/
Brooks, Rodney (1986) Intelligence without Representation. MIT Artificial Intelligence Laboratory
Brooks, Rodney (2002) Flesh and Machines. Pantheon Books
Chatterton, P. (2022) The Rise and Rise of Digital Learning in Higher Education. Ch.5. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Cobello, S. & Mili, E. (2022) The Social Impact of Education Robotics. Ch.13. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Copeland, W., McGinnis, E., Bai, Y., Adams, Z., Nardone, H., Devadanam, V. & Hudziak, J. (2021) Impact of COVID-19 pandemic on college student mental health and wellness. Journal of the American Academy of Child & Adolescent Psychiatry. 60(1):134–141. doi: 10.1016/j.jaac.2020.08.466
Dahmani, L. & Bohbot, V. (2020) Habitual use of GPS negatively impacts spatial memory during self-guided navigation. Scientific Reports. Volume 10, Article number: 6310
Digital Reports (2022) https://datareportal.com/reports/digital-2022-global-overview-report
Ebner, J. (2022) University-School Partnerships. Ch.8. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Flynn, J. (2012) Are We Getting Smarter? Rising IQ in the Twenty-first century. Cambridge. CUP. ISBN 978-1-107-60917-4
Frath, P. (2022) Imaginative Alternatives to the Macabre Constant. Ch.9. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Gazzaniga, M., Ivry, R. & Mangun, G.R. (2019) Cognitive Neuroscience: The Biology of the Mind. W.W. Norton & Company
Glendinning, I. (2022) Academic Integrity. Ch.11. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Haidt, J. (2023) The Teenage Mental-Health Epidemic Began Around 2012. New York University. stern.nyu.edu/experience-stern/faculty-research/teen-mental-illness-epidemic-began-around-2012
Higher Ed Partners (2023) https://higheredpartners.co.uk/why-online-learning-in-higher-education-is-here-to-stay-a-trends-assessment/
InfoQ (2020) OpenAI Announces GPT-3 AI Language Model with 175 Billion Parameters. https://www.infoq.com/news/2020/06/openai-gpt3-language-model/
James, S. (2022) Prioritising Values. Ch.12. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Johnston, N. (2023) Don’t Trust ChatGPT – It said I was a terrorist, says businessman. 16 April, 2023, p.10. DT. telegraph.co.uk
Kaczmarek, B. & Stencel, M. (2018) Third Mode of Thinking. The New Educational Review 53(3) 285-96. doi:10.15804/tner.2018.53.3.24
Kobel, N. (2023) GPT-4 shouldn’t worry anybody – we just need to be better. ITPro.com
Matteucci, R. (2022) Technology & COVID-19: Remote Learning & Flipped Classrooms. Ch.16. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Matteucci, R. (2023) Technology and Learning. In Press
McGregor, G. (2022) Additional Learning Needs: Hearing Development. Ch.14. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Miller, C. (2022) Chip War: The Fight for the World’s Most Critical Technology. Simon & Schuster UK
Minsky, Marvin (1986) The Society of Mind. Simon & Schuster, p. 29
Moravec, Hans (1976) The Role of Raw Power in Intelligence, archived from original, 6 Sept. 2022
Moravec, Hans (1988) Mind Children. Harvard University Press
Negus, E. (2022) Conversational Intelligence: The Basis of Creativity: Learning from Others. Ch.7. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Oppenheimer, R. (1965) The Trinity Test. Atomic Archives. USA
Papenfuss, M. (2023) Creepy Microsoft Bing Chatbot Urges Tech Columnist To Leave His Wife. 17/03/2023. https://www.google.com/search?q=ny+journalist+told+by+a+gpt+chat+to+leave+his+wife&oq=&aqs=chrome.0.35i39i362l8.1756973095j0j15&sourceid=chrome&ie=UTF-8
Pavese, C. (2021) Knowledge How. In: Edward N. Zalta (ed.) The Stanford Encyclopedia of Philosophy. Acc. 23/03/2023. https://plato.stanford.edu/archives/sum2021/entries/knowledge-how/
Pinker, Steven (2007) [1994] The Language Instinct. Perennial Modern Classics, Harper
PR Newswire (2023) Survey Finds 6 in 10 Hiring Managers Have Received More High Quality Applications Since ChatGPT Launch. 4 April, 2023. Resume Builder.com. https://www.benzinga.com/pressreleases/
Rainie, L., Anderson, J. & Vogels, E. (2021) Experts Doubt Ethical AI Design Will Be Broadly Adopted as the Norm within the Next Decade. http://www.pewresearch.org
Romero, A. (2021) Understanding GPT-3. https://towardsdatascience.com/understanding-gpt-3-in-5-minutes-7fe35c3a1e52
Romero, J. (2022) The Forward. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
Sage, R. (2000) Class Talk: Successful Learning through Effective Communication. London: Bloomsbury
Sage, R. (2020) Speechless: Issues for Education. Buckingham. Buckingham University Press
Sage, R. & Sage, L. (2020) A Thinking and Language Analysis of Primary Age Children in Four Schools. Report: The IFLT Trust, UK
Sage, R. & Matteucci, R. (2022) Eds. How World Events are Changing Education. Brill Academic Publishers
Sage, R., Sage, L. & Kaczmarek, B. (2023) A UK Study of Thinking and Language Expression. In Press for: The New Educational Review
Shulman, L. (1986) Those Who Understand: Knowledge Growth in Teaching. Educational Researcher, Vol. 15, No. 2 (Feb., 1986), pp. 4-14. Published by: American Educational Research Association. http://www.jstor.org/stable/1175860
Susskind, D. (2020) A World Without Work: Technology, Automation and How We Should Respond. London: Allen Lane
T.PACK available on http://matt-koehler.com/tpack2/hello-world/
Topping, K., Douglas, W., Robertson, D. & Ferguson, N. (2022) Effectiveness of online and blended learning from schools: A systematic review. First published: 10 May 2022. https://doi.org/10.1002/rev3.3353. https://bera-journals.onlinelibrary.wiley.com/doi/full/10.1002/rev3.3353
Webster, E. (2022) Creativity for Creativity. Ch.6. How World Events are Changing Education. Eds. R. Sage & R. Matteucci. Brill Aca. Pub.
World Bank (2019) The Education crisis: Being in school is not the same as learning. Acc. 05/06/2022. From https://www.worldbank.org/en/news/immersive-story/2019/01/22/pass-or-fail-how-can-the-world-do-its-homew
Xiang, C. (2023) Man Dies by Suicide after Talking with AI Chatbot. 30 March. https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says. Acc. 1/04/2023